Sans the semi-click-baity title, I genuinely want to open your eyes to a new strategy I take with my backups, one where I never really think about schedules, swapping out destinations, differential vs. incremental, or any of the other backup idioms most of us consider normal.

It’s 2019. Enter syncing. Across devices. Across operating systems. Over the network. Oh, and Free.

Buckets

Yep, just like AWS buckets, I have a logical representation for each content class, depending on its attributes and meaning to my overall life. One is Music, another Truehome, and another Games. They’re easy to understand and make sense to me, which is what matters most.

I consider each bucket a top-level object which looks the same across every device, gets treated as a single unit, and never represents more or less than it should. Additionally, devices are second-class citizens: a bucket is never named after the device it lives on. There is no Truehome-WD, Truehome-Network, or Truehome-Work.

For the rest of this article, I’ll be discussing Truehome.

Truehome

Truehome is the most important bucket I have. I ensure it’s replicated in at least 3 places (5 right now), with at least one being completely out of my locality. There’s also a mix of offline cold backups (those are specific to this bucket).
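The replication rule above (at least 3 copies, at least one out of my locality) is simple enough to write down as a check. What follows is only a sketch in Python: the `Replica` and `Bucket` classes, the `meets_policy` function, and the replica labels and flags are all made up for illustration and are not part of any tool mentioned in this article.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Replica:
    name: str               # hypothetical label for where this copy lives
    offsite: bool           # True if the copy is outside my locality
    offline: bool = False   # True for a cold, disconnected backup


@dataclass
class Bucket:
    name: str
    replicas: List[Replica] = field(default_factory=list)


def meets_policy(bucket: Bucket, min_copies: int = 3, min_offsite: int = 1) -> bool:
    """Return True if the bucket has enough copies and enough of them are offsite."""
    offsite = sum(1 for r in bucket.replicas if r.offsite)
    return len(bucket.replicas) >= min_copies and offsite >= min_offsite


# Illustrative data only; the labels and flags below are assumptions.
truehome = Bucket("Truehome", [
    Replica("authoritative-drive", offsite=False),
    Replica("desktop", offsite=False),
    Replica("remote-server", offsite=True),
    Replica("cold-disk", offsite=False, offline=True),
])
print(meets_policy(truehome))
```

Dropping the single offsite replica from the list makes the check fail, which is exactly the situation the rule is meant to catch.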

The reason for its importance? Truehome contains content I’ve made or collected relating to my life and the lives of people close to me. Some examples include family wedding cuts, pictures, repositories, school projects, personal development resources, and so much more. It’s actually under 300GB, mainly because anything older/classic is XZ-compressed, but also because I’ve been good about thinking through what content really matters in my life, my family’s life, and the lives of my children (coming soon).

While I’m an archivist, Truehome definitely isn’t about archiving. If anything, it’s about historical significance, defining who I am, and how I’ve gotten where I am (whether good or bad). The other buckets I have usually hold content driven by my archival tendencies, a lot of which is “cool stuff” or “interesting snippets” which really don’t mean as much to me as my first Carnival cruise at 8 years old.

Google Drive

Stepping away for a moment from Truehome (we’ll get back to it), Google Drive is my authoritative destination for all in-flight data, including documents, notes, PDFs, page saves, downloaded pictures, etc. I’d say Google Drive is my modern home directory, synced across my machines: 1 Mac, 1 PC, and 1 Linux box. This lets me interact with the same content on any device, while also keeping the most important data on each local disk for fast, offline access.

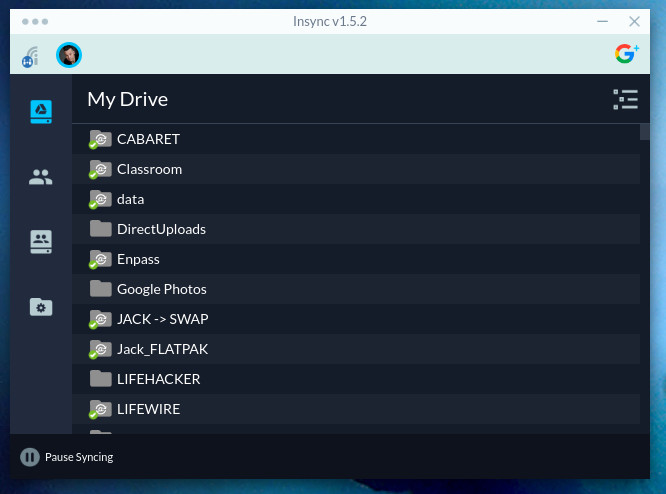

To do this, I use Insync, the multi-platform Google Drive syncing application. It works great, lets me configure every major aspect, and does everything I could ever need it to.

While Drive is great, Dropbox, OneDrive, and other great solutions exist (you can throw a rock to find them). Don’t look for features that don’t really mean that much to you. There is no perfect, but you can get really close with what’s out there. Half of my decision to use Drive is how much I like the simple, basic interface that doesn’t seem to use 9MB of JavaScript.

Blending Them Together

This is the best part: My Google Drive is just a directory in Truehome. It’s synced right into Truehome via Insync, all running on my Raspberry Pi named Pius. See, Pius has a large external drive mounted which contains my authoritative copy of Truehome. In addition, it does the following:

- Syncs my entire Google Drive (excluding Photos) into Truehome

- Syncs my GitHub and Bitbucket repositories, both public and private, into Truehome

- Provides a local network share for me to browse Truehome from any machine

> When I say Sync, I mean keeping the contents up-to-date continuously from the source, e.g. GitHub.

This Little Machine

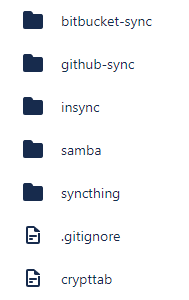

Pius does one thing and one thing only: runs containers which sync data. It’s a basic Raspbian install with Docker, docker-compose, and some basic utilities like tmux. Like any single-node box I manage, I keep one single directory (which is a git repository) that holds host config (e.g. cloud-config) in addition to docker-compose.yaml files defining the applications I want running on that node. That’s it.

Let’s look at the containers themselves, most of which I containerized myself:

docker-rpi-insync - Forked from Dave Conroy’s Insync image, which I enhanced to work on a Raspberry Pi and made a bit more headless.

docker-rpi-ghbackup - Using the popular ghbackup tool, I made a simple containerized version for the Raspberry Pi. This takes care of syncing down my GitHub repos (at timed intervals it contacts GitHub looking for changes).
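To make "at timed intervals it contacts GitHub looking for changes" concrete, here is a minimal Python sketch of that loop. It is not how ghbackup is implemented; it simply shells out to plain `git` (`git clone --mirror` for a first copy, `git remote update --prune` afterwards). The function names, the `.git` directory naming, and the one-hour default interval are assumptions for illustration.

```python
import subprocess
import time
from pathlib import Path
from typing import Dict, List


def sync_command(repo_url: str, dest: Path) -> List[str]:
    """Pick the git command that brings the local mirror at `dest` up to date."""
    if dest.exists():
        # A mirror is already there: fetch new refs and prune deleted ones.
        return ["git", "-C", str(dest), "remote", "update", "--prune"]
    # First run: create a bare mirror clone of the repository.
    return ["git", "clone", "--mirror", repo_url, str(dest)]


def sync_forever(repos: Dict[str, str], root: Path, interval_seconds: int = 3600) -> None:
    """Sync every repo in `repos` (name -> URL) under `root`, then sleep and repeat."""
    while True:
        for name, url in repos.items():
            # check=False so one failing repo does not stop the others.
            subprocess.run(sync_command(url, root / f"{name}.git"), check=False)
        time.sleep(interval_seconds)
```

Because the mirrors land in a directory inside the bucket, anything that later replicates the bucket picks them up without knowing git exists.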

docker-rpi-bbbackup - Using my favorite Bitbucket backup script, I containerized it to run just like ghbackup above.

docker-rpi-samba - A simple Samba file sharing container meant to merely serve directories over the network. Super useful and easy to set up!

For each of these, there’s a docker-compose example you can use to see the optimal way to configure and execute these containers.

Wait, so one External Hard Drive?

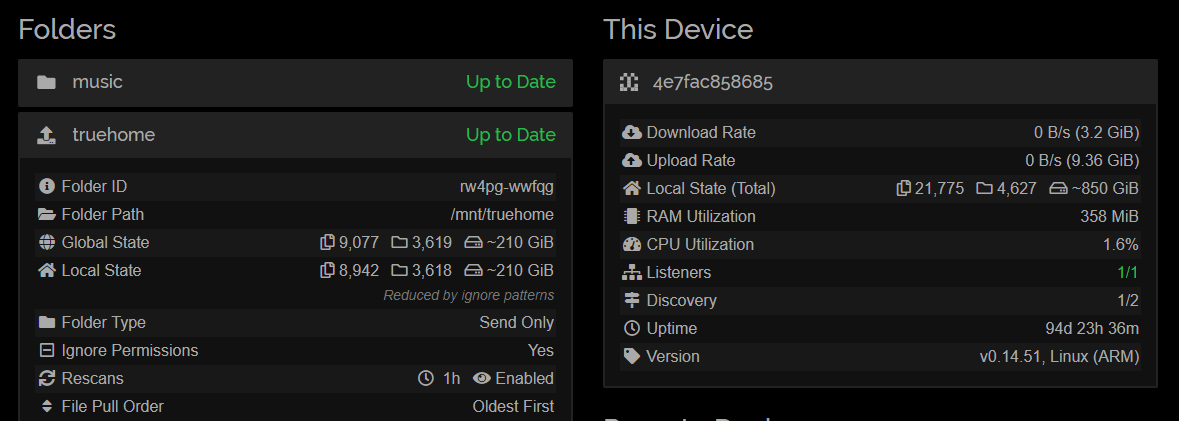

Nope. Welcome to Syncthing! Syncthing has quickly become my favorite piece of FOSS because it takes something complex and makes it beautifully simple. It still lets you get down and dirty, giving you the knobs to hurt yourself or do whatever insane thing you can think of. However, when you tell it what you want, it always makes the best effort to get you there, and it’s especially kind to your precious data.

With Syncthing, Pius maintains the authoritative source of truth, syncing out to my RAID-6 remote server and over to my beefy desktop in the other room. The best part: so long as one node can accept incoming connections to its Syncthing, Pius can stay internal to my home network without any external access. Additionally, each item I “share” in Syncthing is a bucket: Truehome, Music, Games, etc. Things like priority, versioning, and safe deletion are available through a powerful UI that doesn’t even make me cringe! I can’t think of another set-it-and-forget-it piece of software that’s put a smile on my face as much as Syncthing!

Oh, and of course, it’s containerized!

The Ties that Bind

While this has been a long article, this entire setup is quite simple: sync changing data to an authoritative place, then sync the entirety of that data to other devices. It definitely took some upfront work containerizing and structuring the data, but I hardly ever look in on it.
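If you ever do want to look in on it, one way to spot-check that a replica matches the authoritative copy is to hash both trees and compare. The Python sketch below is independent of whatever the syncing tools do internally; the function names `tree_digest` and `out_of_sync` are made up for illustration, and the paths you pass in would be your own.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def tree_digest(root: Path) -> Dict[str, str]:
    """Map each file's path (relative to `root`) to the SHA-256 of its contents."""
    digests: Dict[str, str] = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            h = hashlib.sha256()
            with path.open("rb") as f:
                # Read in 1 MiB chunks so large files do not fill memory.
                for chunk in iter(lambda: f.read(1 << 20), b""):
                    h.update(chunk)
            digests[path.relative_to(root).as_posix()] = h.hexdigest()
    return digests


def out_of_sync(source: Path, replica: Path) -> List[str]:
    """Return relative paths that are missing on either side or differ in content."""
    a, b = tree_digest(source), tree_digest(replica)
    return sorted(k for k in a.keys() | b.keys() if a.get(k) != b.get(k))
```

An empty list means the two trees hold the same files with the same contents. Hashing a few hundred gigabytes takes a while, so this is an occasional audit rather than something to run continuously.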

By using some basic tools, containers, and a low-cost computer, I’ve easily enabled replication with peace of mind. While I didn’t dive into deeper things like the storage on my replicated destinations, or “what happens if something explodes”, this setup has gotten me 95% of the way to where I want to be: a low-effort, low-cost, continuously in-sync storage solution for my most critical data.

Thanks for coming on this ride with me! Hopefully this at least gives you some idea of how you can have your cake (your data) and eat it too (redundancy without the PITA)!